I originally published this post on Medium. I copied it here in May 2024 to keep it alongside my other content.

Last week there was hubbub about what the Raspberry Pi folks did on social media, posting from their own Mastodon instance, and how other Mastodon instance moderators sorta-kinda banded together to “cancel” the brand across large swaths of the Fediverse. It’s an object lesson in how hosting social media spaces requires thicker skin, slower reflexes, and more experience than neophyte Mastodon operators can muster (so far).

What happened?

I first saw the news when Jeff Jarvis shared a link to this post: A Case Study on Raspberry Pi’s Incident on the Fediverse. The post recounts a bit of what happened, but the short version is: Raspberry Pi hired a cop who talked about what he did with Pi hardware in prior covert surveillance work, and this outraged users interested in privacy and skeptical of law enforcement.

When the angry folks brought the torches and pitchforks, the social media folks at Pi reacted… well, let’s say humanly (which can be very bad for a brand). They were openly rude to several people (who were rude to them first). In reaction, some Mastodon instance operators started to block the Raspberry Pi instance (either for rudeness or privacy or law enforcement reasons), thereby cutting off their users from seeing anything from the Raspberry Pi Mastodon server. Banning Pi became a bit of a group sport (although given the federated nature of Mastodon, it’s hard to tell how wide this effort went).

The post recounts the events in more detail, with links, but then it jumps to a list of recommendations aimed at social media practitioners, warning brands to be careful because the Fediverse can more or less lock out badly-behaving brands, potentially on a hair trigger and without recourse.

Brands need to draft twice, post once

It’s always good to remind brands that they need to behave with generosity, grace, and kindness, and to default to forgiving others’ bad behavior. Acting indignant with users (some of whom are customers) doesn’t usually end well. The recommendations from post author Aurynn Shaw are welcome. Aside from general good behavior, brands also need to intimately understand the federated model Mastodon uses, especially if they want to run their own Mastodon instances, releasing their social media content directly into the Fediverse.

Federated = (no one is in charge) x (everyone is in charge)

There’s a key power structure difference with Mastodon. Yes, anyone can broadcast in the general direction of the Fediverse, and users on other instances can listen in, but each Mastodon instance operator, often representing hundreds or even thousands of users, gets to decide whether all their users can or can’t see any other Mastodon source, for any reason. This means there’s not one content moderator in charge (like Elon Musk) for all of Mastodon, but hundreds of moderators, many of whom are hobbyists or neophytes with scant idea of how messy content moderation and community management can be. They may even see blocking “bad actors” as a badge of honor they can wear at the next culture war skirmish.

On Facebook, Twitter, YouTube, and other centralized platforms, there are content moderation rules, centrally administered by paid professionals (although sometimes I wonder). So long as you don’t violate their rules, post away! If you do violate their rules (even by mistake) and you get blocked, you can appeal to the platform operator, maybe apologize, and get back in action. Sure, some users will unfollow you after your public oopsie, but you’re not “canceled” on the platform without recourse.

Meanwhile on the Fediverse, if a Mastodon instance operator decides you crossed an invisible line, poof — you’re canceled for all their users in one shot.

But it gets worse. Once a Mastodon instance operator marks your entire instance as a bad source, there’s no easy path to get off their block list. If there were just 10 instance operators in the world, you could conceivably appeal personally to each one. But with new Mastodon deployments firing up every day, and millions of users flowing in, it’s effectively impossible to find or contact all of the moderators, to say nothing of jumping through whatever hoops each site admin would ask you to jump through.

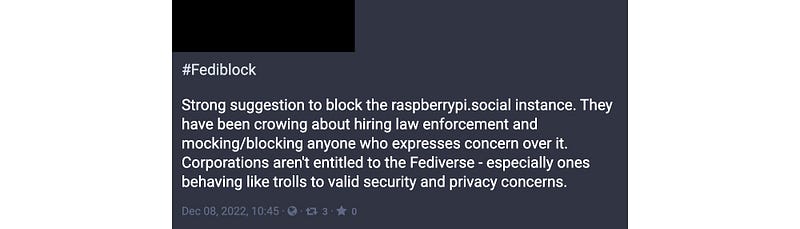

And it gets worse still! Mastodon instance operators share info, especially when it comes to blocking sources. For example, lots of operators block known sources of porn or things that are patently illegal. It’s like sharing virus signatures (although way more manual, for now). So if a source like Raspberry Pi “misbehaves,” their Mastodon instance might end up on a #fediblock list shared across admin groups, and other admins may apply the block without any additional thought. Busy volunteer Mastodon admins are just people: personal outrage, solidarity with other admins, or simply a lack of time can lead them to follow everyone else into a witch hunt.

So… an individual Mastodon user can follow or unfollow others, just like on Twitter. But they are also beholden to the choices of their Mastodon instance operator. If you’re okay with what Raspberry Pi did, but your instance operator is not… the instance operator wins and Raspberry Pi is “canceled” for you.
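To make that override concrete, here’s a minimal sketch of the visibility logic as I’ve described it. This is not Mastodon’s actual code or API; the `Instance` and `User` classes, the `can_see` function, and the `.example` domains are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical, simplified stand-in for one Mastodon server."""
    domain: str
    blocked_domains: set[str] = field(default_factory=set)


@dataclass
class User:
    """Hypothetical user account hosted on one instance."""
    handle: str
    home: Instance
    following: set[str] = field(default_factory=set)  # "name@domain" strings


def can_see(user: User, author: str) -> bool:
    """Return True if posts from `author` ("name@domain") can reach `user`."""
    author_domain = author.rsplit("@", 1)[-1]
    # The operator's domain-level block is checked first and overrides the follow.
    if author_domain in user.home.blocked_domains:
        return False
    return author in user.following


open_server = Instance("open.example")
strict_server = Instance("strict.example", blocked_domains={"pi.example"})

# Both users made the same choice: follow the brand account.
alice = User("alice", open_server, {"news@pi.example"})
bob = User("bob", strict_server, {"news@pi.example"})

print(can_see(alice, "news@pi.example"))  # True
print(can_see(bob, "news@pi.example"))    # False
```

Alice and Bob made the identical choice, but only Alice gets the posts. Bob’s follow never gets consulted, because his admin’s block is checked first.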

Recommendation for Mastodon admins: Toughen up, buttercup

Sadly, all the recommendations in the post about this Pi incident were aimed at social media brand managers. There were no recommendations for Mastodon instance operators, each of whom acts as their own content moderator on behalf of all users on their instance. And those are the folks who need the most training in this case. (Brands already know they need good social media managers.)

If you’re running a Mastodon instance for “average” users, rather than front-line culture warriors, you’re going to have to let a lot of stuff slide, and let each user decide who they wish to follow and avoid.

Consider the hypothetical average user. They want to use Mastodon to do a little social stuff online and are statistically pretty middle-of-the-road politically. Maybe the Pi “incident” made them mad. Maybe glad. Maybe it raised an eyebrow. Or most likely it didn’t, because they didn’t even hear about it. But if you take away their ability to see any brand that ever makes a mistake, pretty soon they won’t be able to follow any brands at all.

So Mastodon admins… Unless your instance is called “wokeistan.social” or “magamania.social” you need to think twice about how you moderate away “objectionable” content that isn’t actually misinformation, illegal, an incitement to violence or hate, or broadly offensive to general user populations (like porn). BuzzFeed easily found folks not offended by the Raspberry Pi situation. But those un-offended folks might lose access to updates from the Pi guys because their Mastodon admin took offense for them and cut off their access.

Moderation isn’t new. It also isn’t easy.

TikTok, YouTube, pre-Musk Twitter, and Facebook have been navigating this moderation slippery slope for years (with mixed success). So take some lessons from them:

- go ahead and block the obviously bad stuff: porn, malware, scams, deliberate misinformation, 4chan-style hate speech and violence, etc.

- withhold hair-trigger reactions or decisions about content / don’t join outrage bandwagons

- make your content policies / strategies / thoughts clear before people join your instance, and stick to your approach

- monitor #fediblock lists or other sources for blocking targets, but make your own blocking decisions, consistent with your content approach

- create a clear, easy appeals process aimed at your instance users, so they can ask to get banned Fediverse sources un-banned

- scale your funding and staffing to sustain all the above
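For admins who like to see things spelled out, here’s a rough sketch of the “monitor shared lists, but make your own decisions” idea. Everything here is an assumption for illustration: the `(domain, reason)` pairs, the `POLICY_BLOCK_REASONS` set, and the `triage` function are hypothetical, and real #fediblock posts are far less structured than this.

```python
# Reasons this hypothetical instance has publicly committed to blocking on.
POLICY_BLOCK_REASONS = {"malware", "scam", "hate speech", "illegal content"}


def triage(shared_entries, policy_reasons=POLICY_BLOCK_REASONS):
    """Split shared blocklist entries into 'block now' and 'needs human review'."""
    block, review = [], []
    for domain, reason in shared_entries:
        if reason.strip().lower() in policy_reasons:
            # The stated reason matches the published policy, so apply the block.
            block.append(domain)
        else:
            # Anything else waits for a person to look at it; no bandwagon blocks.
            review.append(domain)
    return block, review


# Made-up entries standing in for a shared list.
shared = [
    ("spam.example", "Scam"),
    ("brand.example", "rude replies"),
]

to_block, to_review = triage(shared)
print(to_block)   # ['spam.example']
print(to_review)  # ['brand.example']
```

The point of the split is the second bucket: a shared entry that doesn’t match your stated policy becomes a to-do item for a human, not an automatic block.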

And if you want a succinct (and hilarious) look at how to get started with content moderation, check out this Techdirt post: Hey Elon: Let Me Help You Speed Run The Content Moderation Learning Curve.

Bottom line: Slow down, deep breaths, let it go

As Mastodon has ramped up, it’s primarily become a left-wing kinda place, as folks decamp from Musk’s altered Twitter. As such, there’s a strong woke tendency. Combine that with inexperienced and overwhelmed admins and it can feel like blocking a company behaving in boorish ways is a reasonable thing to do.

My take: No, it’s not.
